Machine learning is what makes today’s robots feel less like rigid machines and more like adaptable coworkers. ML-powered robots are 40% faster at adapting to changes than traditional systems.

ML-powered robots can adjust paths, recognize objects, or even predict failures before they happen. Put simply, a machine learning robot can catch mistakes, learn from them, and get better with every attempt. That’s why self-driving cars can handle traffic, warehouse bots can reroute around blocked aisles, and drones can adapt mid-flight.

From educational robots teaching coding to adaptive robotics used in factories, the technology is shaping the next wave of automation.

What is machine learning in robotics?

Machine learning in robotics enables robots to infer patterns from data and adapt their actions, without explicit programming or new code for every situation.

In practice, this means a robot can spot patterns, predict outcomes, and fix mistakes on the fly. Picture a traditional robot arm that drops a phone case during assembly. It drops the next one. And the next. In contrast, a machine learning robot notices the pattern after the first drop, adjusts its grip pressure, and nails every case after that. Even if the shape changes slightly. No reprogramming needed.

Think of machine learning as the “hands-on” part of artificial intelligence. AI sets the broad decision-making framework, but ML handles the actual learning. Robotics brings this learning into the physical world, letting real machines see, adapt, and improve with every cycle.

How machine learning works in robots: The 3-step learning loop

Machine learning in robots works through a feedback loop with three steps: the robot (1) collects data and trains a model on it, (2) makes predictions and acts on them, and (3) checks the results and corrects itself. Repeating this cycle lets the robot improve with every attempt, rather than relying on hardcoded instructions.
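To make the predict, act, and correct loop concrete, here is a minimal Python sketch. It assumes a hypothetical robot whose actuator always lands a fixed, unknown offset away from where it is commanded. The robot predicts that offset, acts with a compensated command, measures the miss, and corrects its estimate with a simple gradient-style update. The function name, offset, and learning rate are illustrative assumptions, not taken from any real robot.

```python
def run_learning_loop(true_offset: float, target: float = 100.0,
                      steps: int = 50, learning_rate: float = 0.5) -> list[float]:
    """Learn an unknown positioning offset through predict -> act -> correct.

    Hypothetical setup: the simulated actuator always lands `true_offset` mm
    away from where it is commanded. The robot does not know this value.
    """
    estimated_offset = 0.0  # the "model": a single learned parameter
    errors = []
    for _ in range(steps):
        # Predict: compensate the command using the current estimate.
        command = target - estimated_offset
        # Act: the simulated robot moves and lands off by the true offset.
        actual_position = command + true_offset
        # Correct: measure the miss and move the estimate to shrink it.
        error = actual_position - target
        estimated_offset += learning_rate * error
        errors.append(abs(error))
    return errors


errors = run_learning_loop(true_offset=3.0)
print(errors[:3])  # [3.0, 1.5, 0.75]: the miss halves on each pass
```

With a learning rate of 0.5, each pass removes half of the remaining error. A larger rate learns faster but can overshoot, which is the same trade-off real learning systems tune.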

Robots use three core methods of learning:

- Supervised learning trains on labeled examples, such as teaching a robot to recognize objects by showing it thousands of images.

- Unsupervised learning finds hidden patterns, like grouping parts by similarity without being told the categories.

- Reinforcement learning lets robots learn through trial and error, rewarding successful actions and discouraging failures.
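Of the three methods, supervised learning is the easiest to show in a few lines. The sketch below is a nearest-centroid classifier, one of the simplest supervised methods, trained on hypothetical labeled measurements (part width and weight). A real vision system would typically train a neural network on images instead. The feature names, part types, and numbers are made up for illustration.

```python
from math import dist


def train_centroids(examples):
    """Training step: average the feature vectors that share each label."""
    sums = {}
    counts = {}
    for (width, weight), label in examples:
        s = sums.setdefault(label, [0.0, 0.0])
        s[0] += width
        s[1] += weight
        counts[label] = counts.get(label, 0) + 1
    return {label: (s[0] / counts[label], s[1] / counts[label])
            for label, s in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the new measurement."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled data: (width_mm, weight_g) -> part type
training_data = [
    ((20.0, 5.0), "bolt"), ((22.0, 5.5), "bolt"),
    ((60.0, 40.0), "bracket"), ((58.0, 42.0), "bracket"),
]
centroids = train_centroids(training_data)
print(predict(centroids, (21.0, 6.0)))   # bolt
print(predict(centroids, (59.0, 39.0)))  # bracket
```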

A common example is a robotic arm learning to improve its grip. At first, it may drop an item, but over repeated trials, it adjusts pressure, angle, and speed until it masters the task. This process reflects how machine learning transforms robotics from rigid execution into adaptive performance.
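The grip example maps naturally onto reinforcement learning. Below is a minimal sketch using an epsilon-greedy multi-armed bandit, one of the simplest trial-and-error methods. Real arms usually learn continuous pressure, angle, and speed with richer RL algorithms. The pressure levels and simulated success rates are assumptions invented for this example.

```python
import random

# Hypothetical grip pressure levels (newtons) and how often each succeeds in
# this simulated world: too little drops the part, too much damages it.
PRESSURES = [5, 10, 15, 20, 25]
SUCCESS_RATE = {5: 0.2, 10: 0.6, 15: 0.95, 20: 0.7, 25: 0.3}


def try_grip(pressure: int, rng: random.Random) -> float:
    """Simulated trial: reward 1.0 if the grip succeeds, otherwise 0.0."""
    return 1.0 if rng.random() < SUCCESS_RATE[pressure] else 0.0


def learn_grip(trials: int = 5000, epsilon: float = 0.1, seed: int = 0) -> int:
    rng = random.Random(seed)
    value = {p: 0.0 for p in PRESSURES}  # estimated success rate per pressure
    count = {p: 0 for p in PRESSURES}
    for _ in range(trials):
        # Explore a random pressure occasionally; otherwise exploit the best so far.
        if rng.random() < epsilon:
            pressure = rng.choice(PRESSURES)
        else:
            pressure = max(PRESSURES, key=lambda p: value[p])
        reward = try_grip(pressure, rng)
        # Incremental average: move the estimate toward the observed reward.
        count[pressure] += 1
        value[pressure] += (reward - value[pressure]) / count[pressure]
    # The learned policy: the pressure with the highest estimated success rate.
    return max(PRESSURES, key=lambda p: value[p])


print(learn_grip())
```

The `epsilon` parameter controls the balance between trying new pressures and repeating the best one found so far. With enough trials, the most reliable level in this made-up table (15 N) should come out on top.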

Applications of machine learning in robotics

Applications of machine learning in robotics cover navigation, perception, decision-making, and adaptive control across industries.

Key ML methods and algorithms used in robotics

Key ML methods and algorithms used in robotics include supervised learning, unsupervised learning, reinforcement learning, deep learning models, and hybrid approaches that combine ML with classic control.

- Supervised learning: Robots learn from labeled datasets, such as images of parts labeled as correct or defective. This method powers object recognition, scene segmentation, and pose estimation for assembly and inspection tasks.

- Unsupervised learning: Robots find patterns without labels, making it useful for clustering and anomaly detection. Variants like self-supervised learning let robots generate labels from raw sensor streams, reducing the need for manual data preparation.

- Reinforcement learning: Robots learn through trial and error, guided by rewards for correct actions. It is widely used in control and motion planning, helping robots improve at tasks like insertion, navigation, or balancing in dynamic environments.

- Deep learning and neural networks: Convolutional neural networks handle vision tasks, recurrent networks manage sequences, and transformer models capture relationships across multiple data types. These approaches make perception and decision-making more accurate and robust.

- Hybrid approaches: Robots often combine ML with proven control systems. Classical algorithms guarantee stability, while ML adds adaptability for perception, calibration, and fine-tuned manipulation. This balance delivers safe yet flexible performance.
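A hybrid controller can be sketched in a few lines. In this minimal Python example, a classical proportional controller drives a hypothetical one-joint arm toward a target. A learned term estimates an unknown constant drift, such as an unmodeled load, from the gap between predicted and actual motion, and then adds compensation. The plant model, gains, and disturbance value are assumptions. Real systems use full PID or model-based control with much richer learned components.

```python
def simulate(steps: int = 200, use_learning: bool = True,
             kp: float = 2.0, dt: float = 0.05, target: float = 1.0,
             disturbance: float = 0.5, learning_rate: float = 0.2) -> float:
    """Return the final tracking error of a 1-D joint under P control.

    Hypothetical plant: position changes at the commanded velocity minus an
    unknown constant drift (`disturbance`), e.g. an unmodeled load.
    """
    position = 0.0
    learned_bias = 0.0  # ML part: running estimate of the unknown drift
    for _ in range(steps):
        error = target - position
        # Classical part: proportional feedback with well-understood behavior.
        command = kp * error
        if use_learning:
            command += learned_bias  # learned feedforward compensation
        # Compare the nominal model's prediction with what the joint actually does.
        predicted = position + dt * command
        position = position + dt * (command - disturbance)
        if use_learning:
            # The gap between prediction and reality reveals the drift.
            observed_drift = (predicted - position) / dt
            learned_bias += learning_rate * (observed_drift - learned_bias)
    return abs(target - position)


print(round(simulate(use_learning=False), 3))  # 0.25: P control alone leaves an offset
print(simulate(use_learning=True) < 1e-6)      # True: the learned term removes it
```

The division of labor mirrors the bullet above. The proportional loop keeps the behavior predictable, and the learned term only fills in what the nominal model misses.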

Challenges, limitations, and risks of machine learning in robotics

Challenges, limitations, and risks of machine learning in robotics stem from data demands, hardware constraints, safety issues, and ethical considerations.

- Data requirements: Robots need large, diverse, and accurately labeled datasets to train models. Inconsistent or biased data can lead to unreliable performance when robots operate in the real world.

- Hardware constraints: Machine learning models require significant compute power, high-quality sensors, and reliable energy sources. For example, training a single industrial robot's vision system can require specialized GPUs drawing 1,500 to 3,000 watts, roughly what 15 to 30 desktop computers use at about 100 watts each. Running trained models on board also draws power, and many compact or mobile robots cannot support these demands without sacrificing runtime or efficiency.

- Sim-to-real transfer problems: Robots often train in simulated environments, but transferring that knowledge to the real world is difficult. Small differences in lighting, friction, or sensor noise can cause failures.

- Safety and transparency: Many ML models operate as “black boxes,” making their decisions hard to interpret. This lack of transparency raises concerns when robots are used in critical areas like healthcare or public spaces.

- Ethical and privacy concerns: Robots interacting with people may collect sensitive data such as images, speech, or biometrics. Without safeguards, this data could be misused, raising privacy and security risks.
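One widely used mitigation for the sim-to-real problem is domain randomization. Instead of training in one perfect simulated world, the robot trains across many worlds with randomly varied lighting, friction, and sensor noise, so the real world looks like just another variation. The sketch below covers only the sampling of randomized settings for each training episode. The parameter names and ranges are hypothetical, and the simulator and training step are left out.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimConfig:
    light_intensity: float   # relative to a nominal lamp (1.0 = nominal)
    friction_coeff: float    # contact friction between gripper and part
    sensor_noise_std: float  # std. dev. of noise added to depth readings (mm)


# Hypothetical ranges; real ones would come from measuring the target cell.
RANGES = {
    "light_intensity": (0.5, 1.5),
    "friction_coeff": (0.3, 0.9),
    "sensor_noise_std": (0.0, 2.0),
}


def sample_config(rng: random.Random) -> SimConfig:
    """Draw one randomized simulated world, uniformly within each range."""
    return SimConfig(**{name: rng.uniform(low, high)
                        for name, (low, high) in RANGES.items()})


def randomized_episodes(n: int, seed: int = 42) -> list[SimConfig]:
    """Seeded so that a training run can be reproduced exactly."""
    rng = random.Random(seed)
    return [sample_config(rng) for _ in range(n)]


for config in randomized_episodes(3):
    print(config)  # each training episode would run in a differently randomized world
```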

Future trends and emerging directions in machine learning for robotics

Future trends and emerging directions in machine learning for robotics focus on making robots more autonomous, efficient, and collaborative.

- Greater autonomy: Robots will rely less on human supervision as ML models mature. From factory floors to self-driving vehicles, self-learning robots will handle more decision-making independently.

- On-device learning: Edge computing will allow robots to process and learn directly on their hardware. This reduces latency, saves bandwidth, and enables faster adaptation in real time.

- Multi-modal models: Combining vision, audio, and touch, robots will interpret complex environments more like humans. Multi-modal ML makes human-robot collaboration smoother and more intuitive.

- Integration with generative AI: Generative models will assist with planning, reasoning, and language-based interaction. Robots will be able to understand instructions in natural language and translate them into actions.

- Closer human-robot collaboration: ML will support safe, intuitive interfaces where robots and humans share tasks. This trend is already visible in adaptive robotics and will expand into more industries.

Case study: How Standard Bots is using ML in its products

.png)

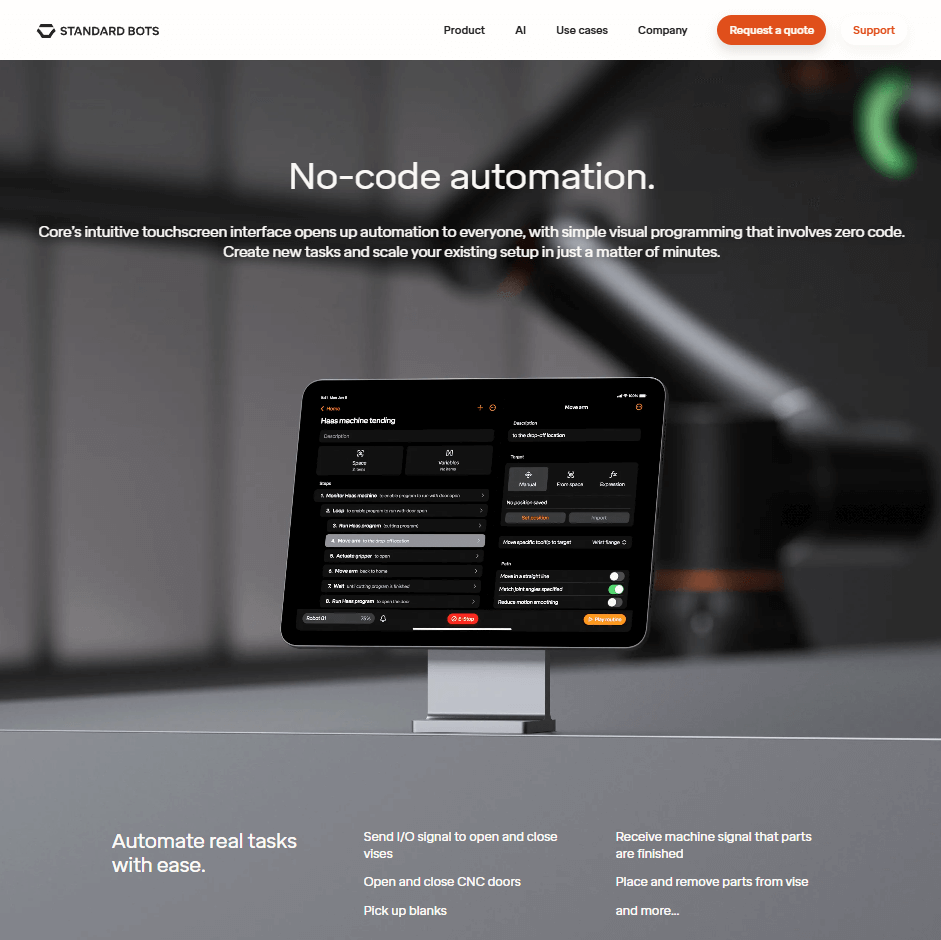

Standard Bots Core uses ML-driven perception to identify parts and work areas. Its built-in vision and sensors let it adjust motion in real time for safer, faster cycles, whether it is tending CNC machines, handling delicate components, or working alongside people.

Core’s system refines its accuracy by learning from feedback loops, minimizing errors and downtime. It also supports no-code automation, so operators can adjust workflows without writing code. To bridge sim-to-real gaps, Core trains in simulation first and then fine-tunes on the floor, ensuring performance holds up under real lighting, friction, and noise.

Machine learning also supports safety and error detection. Standard Bots Core applies anomaly detection to prevent faults, while ML-powered collision detection supports safe human-robot collaboration.

This mix of perception, anomaly detection, and collision avoidance shows how Standard Bots applies ML to raise uptime and safety.

Final thoughts

Machine learning in robotics turns rigid machines into adaptive systems. It works through methods like supervised, unsupervised, and reinforcement learning, backed by deep learning. These approaches enable navigation, quality control, predictive maintenance, and even healthcare robotics.

The benefits include smarter automation, safer collaboration, and reduced downtime. But challenges like data demands, hardware limits, and sim-to-real gaps still need attention.

Standard Bots Core shows how ML-driven perception, feedback loops, and no-code automation raise uptime and safety on the shop floor. The next step is to prioritize ML-ready features so your automation adapts instead of stalling.

Next steps with Standard Bots’ robotic solutions

Looking to upgrade your automation game? Standard Bots Thor is built for big jobs, while Core is the perfect six-axis cobot addition to your machine-tending cell, delivering unbeatable throughput and flexibility.

- Affordable and adaptable: Core costs $37K. Thor lists at $49.5K. Get high-precision automation at half the cost of comparable robots.

- Perfected precision: With a repeatability of ±0.025 mm, both Core and Thor handle even the most delicate tasks.

- Real collaborative power: Core's 18 kg payload conquers demanding palletizing jobs, and Thor's 30 kg payload crushes heavy-duty operations.

- AI-driven simplicity: Equipped with AI capabilities on par with GPT-4, Core and Thor integrate smoothly with CNC operations for advanced automation.

- Safety-first design: Machine vision and collision detection mean Core and Thor work safely alongside human operators.

Schedule your on-site demo with our engineers today and see how Standard Bots Core and Thor can bring AI-powered greatness to your shop floor.

FAQs

1. What is machine learning in robotics?

Machine learning in robotics is the use of algorithms that allow robots to learn from data, adapt to changes, and improve their performance through feedback. Unlike traditional robots that follow rigid preprogrammed instructions, ML-powered robots can recognize new objects, adjust when environments change, and refine their actions over time.

For example, a robotic arm can learn how to grip fragile items without breaking them by practicing and correcting its movements. This ability makes robots more flexible in unpredictable environments such as warehouses, hospitals, or research labs.

2. How is machine learning applied in robots today?

Machine learning is applied in robots across industries to perform tasks that require adaptability, precision, and decision-making. In logistics, autonomous mobile robots use ML for navigation, obstacle avoidance, and path planning.

In manufacturing, robotic arms rely on computer vision models to detect product defects during quality checks or to adjust their actions when components are misaligned.

Service and companion robots apply ML to interpret speech, gestures, and facial expressions, making human-robot interaction more natural. Even in predictive maintenance, ML models monitor sensors to anticipate breakdowns before they occur.
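Sensor-based anomaly detection is the basis of many predictive-maintenance setups, and it can start as simply as flagging readings that sit far outside recent behavior. The minimal sketch below applies a rolling z-score to a made-up stream of motor-current readings. The window size, threshold, and data are assumptions, and it is not any vendor's implementation. Production systems typically use learned models over many sensors at once.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings: list[float], window: int = 10,
                     threshold: float = 3.0) -> list[int]:
    """Return indices of readings more than `threshold` std devs from the recent mean."""
    history = deque(maxlen=window)  # sliding window of recent normal readings
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
                continue  # keep the spike out of the baseline
        history.append(value)
    return anomalies


# Hypothetical motor current (amps): steady operation, then a spike at index 15.
current = [2.0, 2.1, 1.9, 2.0, 2.05, 1.95, 2.0, 2.1, 1.9, 2.0,
           2.02, 1.98, 2.0, 2.1, 1.9, 3.5, 2.0, 1.97]
print(detect_anomalies(current))  # [15]
```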

3. What role does deep learning play in robotics?

Deep learning plays a central role in robotics by powering perception and high-level decision-making. Convolutional neural networks allow robots to recognize objects, scenes, and even surface defects with remarkable accuracy, while recurrent networks handle time-based patterns such as sequences of movements.

More recently, transformer-based models have made it possible for robots to combine different types of data, such as vision, sound, and touch, into a single decision-making process. This multi-modal ability allows robots to operate in more complex, human-like ways.

4. What are the benefits of machine learning in robotics?

The benefits of machine learning in robotics include adapting to unstructured environments, reducing downtime through predictive insights, and continuously optimizing performance. For businesses, this means automation that improves output quality, lowers energy consumption, and speeds up production without increasing errors.

For workers, it means safer collaboration since ML allows robots to detect human presence and adjust movements accordingly. Beyond industrial use, ML-powered robots are already making advances in healthcare, where surgical systems use ML for precision, and in education, where educational robots adapt lessons to student responses.

5. What are the main challenges of using ML in robotics?

The main challenges of using ML in robotics revolve around data, hardware, and safety. Training an effective model requires huge volumes of labeled, diverse data that capture real-world variability. This is expensive and difficult to gather. High-performance processors and sensors are costly, consume significant power, and can limit how mobile robots operate.

Sim-to-real transfer also remains a hurdle, as models that work in simulation often fail when faced with real-world noise, lighting, or friction differences. Finally, many ML systems function as “black boxes,” which makes it difficult to understand why a robot made a certain decision.

6. What is the future of machine learning in robotics?

The future of machine learning in robotics is moving toward greater autonomy, intelligence, and human-robot collaboration. Robots will increasingly rely on self-supervised and unsupervised learning, reducing the need for costly labeled datasets.

Advances in edge computing will let robots process and learn directly on their hardware, speeding up responses and reducing reliance on cloud systems.

Multi-modal models will give robots the ability to combine visual, auditory, and tactile inputs, making them more intuitive partners in industries like manufacturing, healthcare, and even household use. Integration with generative AI will allow robots to plan complex tasks or understand natural language instructions with greater ease.
